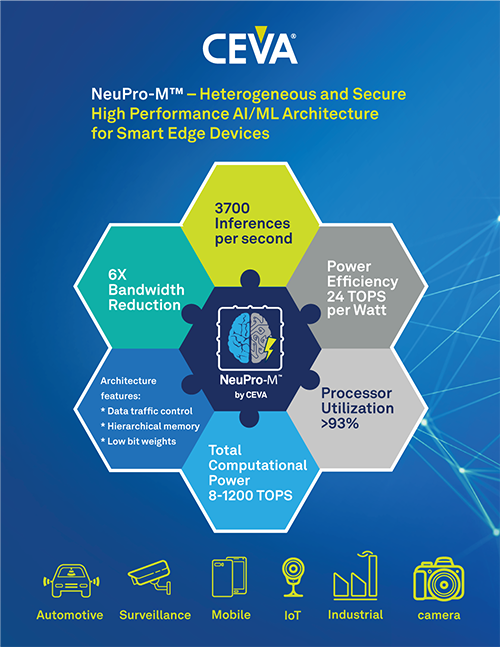

• 3rd generation NeuPro AI/ML architecture provides scalable performance from 20 to 1,200 TOPS at the SoC and chiplet level, reducing memory bandwidth consumption by a factor of six

• Targets widespread AI/ML processing in automotive, industrial, 5G network, mobile phone, surveillance camera and edge computing applications

CEVA (NASDAQ: CEVA), the world's leading licensor of wireless connectivity and smart sensing technologies and integrated IP solutions, has announced NeuPro-M, a heterogeneous processor architecture aimed at the broad edge AI and edge computing markets. NeuPro-M is composed of multiple dedicated coprocessors and configurable hardware accelerators, and can handle the varied workloads of deep neural networks concurrently, delivering 5 to 15 times the performance of the previous generation. NeuPro-M is an industry first in supporting both System-on-Chip (SoC) and Heterogeneous SoC (HSoC) scalability, reaching up to 1,200 TOPS, with optional robust secure boot and end-to-end data privacy features.

The NeuPro-M series processors initially contain the following preconfigured cores:

• NPM11 – a single NeuPro-M engine with up to 20 TOPS at 1.25GHz

• NPM18 – 8 NeuPro-M engines with up to 160 TOPS at 1.25GHz

When processing the ResNet50 convolutional neural network, a single NPM11 core delivers up to five times the performance of the previous generation and reduces memory bandwidth consumption by a factor of six, resulting in power efficiency of up to 24 TOPS/W.
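The quoted figures can be related with some simple arithmetic. The sketch below is only a back-of-the-envelope check: it assumes peak throughput scales linearly with the number of engines, and that the "up to 24 TOPS/W" figure applies at the 20 TOPS peak, neither of which the announcement states. The function names are illustrative.

```python
# Figures quoted in the announcement.
TOPS_PER_ENGINE = 20       # NPM11: a single engine at 1.25 GHz
MAX_TOPS = 1200            # upper end of the quoted scaling range
PEAK_TOPS_PER_WATT = 24    # quoted "up to" power efficiency


def aggregate_tops(engines: int) -> int:
    """Peak TOPS, ASSUMING throughput scales linearly with engine count."""
    return engines * TOPS_PER_ENGINE


def engines_for(target_tops: int) -> int:
    """Engines needed to reach a target under the same linear assumption."""
    return -(-target_tops // TOPS_PER_ENGINE)  # ceiling division


def implied_power_watts(tops: float) -> float:
    """Power implied if the peak TOPS/W figure held at this throughput (assumption)."""
    return tops / PEAK_TOPS_PER_WATT


print(aggregate_tops(8))           # NPM18 configuration
print(engines_for(MAX_TOPS))       # engines implied by the 1,200 TOPS ceiling
print(implied_power_watts(20))     # watts for one engine at peak efficiency
```

Under these assumptions, eight engines give the 160 TOPS quoted for NPM18, and the 1,200 TOPS ceiling corresponds to 60 engines spread across an SoC or chiplets.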

Building on the successful previous generation, NeuPro-M can handle all known neural network architectures and integrates native support for next-generation networks such as transformers, 3D convolution, self-attention and all types of recurrent neural networks. NeuPro-M has been optimized to process over 250 neural networks, over 450 AI kernels and over 50 algorithms. The embedded Vector Processing Unit (VPU) provides software-based support for future neural network topologies and AI workloads. In addition, for common benchmarks, the CDNN offline compression tools can improve NeuPro-M's FPS/Watt performance by 5 to 10 times with minimal impact on accuracy.

Ran Snir, vice president and general manager of the Vision Business Unit at CEVA, commented: "As more data is generated, and sensor-related software workloads continue to migrate to neural networks for better performance and efficiency, the AI and ML processing requirements of edge AI and edge computing are growing at an incredible rate. As the power budgets of these devices remain the same, we must find innovative ways to use AI at the edge of these increasingly complex systems. The NeuPro-M architecture was designed using extensive experience deploying AI processors and accelerators in devices such as smartphones, security cameras and automotive systems. NeuPro-M's innovative distributed architecture and shared memory system controller reduce bandwidth consumption and latency to a minimum, and provide excellent overall utilization and power efficiency. This allows our customers to connect multiple NeuPro-M compatible cores in an SoC or chiplet for the most demanding AI workloads, taking intelligent edge processing in device design to a whole new level."

The NeuPro-M heterogeneous architecture consists of function-specific coprocessors and load-balancing mechanisms, which are the main contributors to the large leap in performance and efficiency over the previous generation. By assigning control functions to local controllers and implementing local memory resources hierarchically, NeuPro-M achieves flexibility in processing data streams, enabling over 90% utilization and preventing the various coprocessors and accelerators from being starved of data at any given time. The CDNN framework achieves optimal load balancing by applying data flow schemes adapted to the specific network, the required bandwidth, the available memory and the target performance.

NeuPro-M architectural highlights include:

• Main grid array of 4K MACs (multiply-accumulate units) with mixed precision from 2 to 16 bits

• Winograd transform engine for weights and activation operations that reduces convolution time by a factor of two and allows 8-bit convolution processing with an accuracy reduction of less than 0.5%

• Sparsity engine that skips operations on zero-valued weights or activations in each layer, improving performance by up to four times while reducing memory bandwidth and power consumption

• Fully programmable vector processing unit for handling new, not-yet-supported neural network architectures, with support for all data types from 32-bit floating point down to 2-bit binary neural networks (BNN)

• Configurable weight data compression down to two bits per weight, with on-the-fly decompression when reading from memory, to reduce memory bandwidth consumption

• Minimizes data transfer power consumption to and from external SDRAM using a dynamically configured two-level memory architecture
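To illustrate the weight-compression item in the list above, the following minimal sketch packs 2-bit weight codes four to a byte and unpacks them lazily while reading. It is a generic illustration, not CEVA's compression scheme, which the announcement does not describe; the function names `pack_2bit` and `unpack_2bit` and the bit ordering are assumptions made for the example.

```python
def pack_2bit(codes):
    """Pack 2-bit codes (integers 0..3) four to a byte, lowest bits first."""
    out = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, code in enumerate(codes[i:i + 4]):
            if not 0 <= code <= 3:
                raise ValueError("code does not fit in 2 bits")
            byte |= code << (2 * j)
        out.append(byte)
    return bytes(out)


def unpack_2bit(packed, count):
    """Yield `count` codes one at a time, mimicking decompression on read."""
    for i in range(count):
        yield (packed[i // 4] >> (2 * (i % 4))) & 0b11


codes = [0, 1, 2, 3, 3, 2, 1, 0, 1]     # hypothetical quantized weight codes
packed = pack_2bit(codes)
restored = list(unpack_2bit(packed, len(codes)))
print(len(codes), "codes stored in", len(packed), "bytes")
```

Compared with storing each weight in 8 bits, this layout moves a quarter of the bytes across the memory interface, which is the kind of saving the bullet refers to.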

Combining the orthogonal mechanisms of the NeuPro-M architecture, namely the Winograd transform, the sparsity engine and low-resolution 4x4-bit activations, reduces the cycle count for networks such as ResNet50 and YOLO V3 by more than three times.
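The idea behind the Winograd transform can be shown with the smallest textbook case, F(2,3), which computes two outputs of a 3-tap one-dimensional convolution with four multiplications instead of six. This sketch only illustrates the general technique; the announcement does not say which Winograd variant or tile size the NeuPro-M engine uses, and the function names are illustrative.

```python
def direct_conv(d, g):
    """Two outputs of a 3-tap filter over 4 inputs: 6 multiplications."""
    return [
        d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
        d[1] * g[0] + d[2] * g[1] + d[3] * g[2],
    ]


def transform_filter(g):
    """Filter-side transform; can be precomputed once per filter."""
    return [g[0], (g[0] + g[1] + g[2]) / 2, (g[0] - g[1] + g[2]) / 2, g[2]]


def winograd_f23(d, u):
    """Winograd F(2,3): the same two outputs using 4 multiplications."""
    m1 = (d[0] - d[2]) * u[0]
    m2 = (d[1] + d[2]) * u[1]
    m3 = (d[2] - d[1]) * u[2]
    m4 = (d[1] - d[3]) * u[3]
    return [m1 + m2 + m3, m2 - m3 - m4]


d = [1.0, 2.0, 3.0, 4.0]    # input tile
g = [2.0, 4.0, 6.0]         # filter taps
print(direct_conv(d, g))
print(winograd_f23(d, transform_filter(g)))
```

The multiplications saved are traded for extra additions and a one-time filter transform. In two dimensions, the corresponding F(2x2, 3x3) form needs 16 multiplications per tile instead of 36.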

As neural network weights and biases, as well as datasets and network topologies, become important intellectual property for owners, there is an urgent need to protect this information from unauthorized use. The NeuPro-M architecture supports secure access with optional root of trust, authentication and encryption accelerators.

For the automotive market, CEVA offers the NeuPro-M core and its CEVA Deep Neural Network (CDNN) deep learning compiler and software toolkit, which not only meet the automotive ISO 26262 ASIL-B functional safety standard, but also comply with the stringent quality assurance standards IATF 16949 and A-SPICE.

Combined with CEVA's award-winning neural network compiler CDNN and its powerful software development environment, the NeuPro-M architecture provides customers with a fully programmable hardware/software AI development environment to maximize AI processing performance. CDNN includes innovative software that takes full advantage of the customer's customized NeuPro-M hardware to optimize power consumption, performance and bandwidth. The CDNN software also includes a memory manager for reducing memory consumption and algorithms for optimal load balancing, and supports a wide variety of network formats (including ONNX, Caffe, TensorFlow, TensorFlow Lite, PyTorch, etc.). CDNN is compatible with common open source frameworks (including Glow, TVM, Halide and TensorFlow) and includes model optimization features such as "layer fusion" and "post-training quantization", all using accuracy-preserving methods.
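As a rough illustration of what post-training quantization means, the sketch below applies symmetric per-tensor quantization to a list of already-trained weights. This is a common textbook scheme shown for orientation only; it is not CDNN's algorithm, which the announcement does not describe, and the function names and sample weights are hypothetical.

```python
def quantize_symmetric(weights, bits=8):
    """Map floats to signed integers using a single per-tensor scale."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0  # avoid dividing by zero
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]                # hypothetical trained weights
q, scale = quantize_symmetric(weights)
approx = dequantize(q, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, approx))
print(q, scale, worst_error)
```

No retraining is involved: the scale is derived from the trained weights themselves, and the rounding error per weight is bounded by half the scale.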

CEVA is currently licensing NeuPro-M to lead customers and will make it available for general licensing in the second quarter of this year. CEVA also provides heterogeneous SoC design services to NeuPro-M customers, helping them with system integration and supporting system design and chiplet development.